Intelligent machines take off - Generative artificial intelligence (GenAI) brings potential technological changes to the aviation industry

How will generative artificial intelligence (GenAI) fit into the future aviation sector, and how can compliance be achieved? Irene Ruiz-Gabernet, Airbus UK Military Safety and Compliance Director and Fellow of the Royal Aeronautical Society, shared her views on the promising future of this field.

Driven by artificial intelligence, the aviation industry is on the eve of a revolution. Although humans remain irreplaceable when it comes to creativity, judgment, intuition and context, GenAI can still contribute many valuable outputs. It can write detailed reports, turn product ideas into distinctive designs, and act as a catalyst for productivity and innovation.

The impact of this revolutionary technology on the economy will be staggering. McKinsey's report "The economic potential of generative AI: The next productivity frontier" estimates that GenAI could sharply lift the technical automation potential of some work activities, from 24.5% to 58.5%, with the effect most evident in activities that apply expertise to decision-making, planning and coordination.

By applying expertise to decision-making and planning tasks, AI can revolutionize the way we work and reshape job requirements, freeing people from heavy, repetitive mental work so that they can focus on strategic and more pressing issues.

Understanding the key concepts

GenAI also offers capabilities such as retrieval-augmented generation (for example, building chatbots), summarization (condensing information), content generation (creating text), named entity recognition (extracting key information from text), insight extraction (analyzing unstructured content to surface insights), and classification (reading and classifying written input from only a few examples). At its core is the concept of foundation models: large language models, such as those behind ChatGPT, that are trained largely through self-supervision on vast datasets. This allows them to perform a wide range of downstream tasks.
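To make retrieval-augmented generation more concrete, here is a minimal sketch of its retrieval step. It assumes a toy in-memory document store and simple word-overlap scoring, standing in for the vector embeddings a production system would more likely use. The documents and the `retrieve` and `build_prompt` helpers are hypothetical, and no real model is called: the code only assembles the grounded prompt that would be sent to a foundation model.

```python
import re
from collections import Counter

# Hypothetical snippets standing in for an operator's document store.
DOCUMENTS = [
    "Task 32-11: inspect main landing gear attachment fittings every 600 flight hours.",
    "Bird strike reports must be filed within 72 hours of landing.",
    "Hangar slot requests are confirmed by the planning office one week ahead.",
]


def tokenize(text: str) -> Counter:
    """Lower-case word counts; a crude stand-in for an embedding."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def score(query: str, doc: str) -> int:
    """Number of overlapping word occurrences between query and document."""
    return sum((tokenize(query) & tokenize(doc)).values())


def retrieve(query: str, docs: list[str], k: int = 1) -> list[str]:
    """Return the k documents that share the most words with the query."""
    return sorted(docs, key=lambda d: score(query, d), reverse=True)[:k]


def build_prompt(query: str, docs: list[str], k: int = 1) -> str:
    """Ground the model's answer in retrieved context (the 'R' in RAG)."""
    context = "\n".join(f"- {d}" for d in retrieve(query, docs, k))
    return (
        "Answer using only the context below.\n"
        f"Context:\n{context}\n"
        f"Question: {query}\nAnswer:"
    )


if __name__ == "__main__":
    print(build_prompt("How often are landing gear fittings inspected?", DOCUMENTS))
```

Grounding the prompt in retrieved text is what lets a general-purpose model answer from proprietary documents it was never trained on.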

Benefits over predictive ML

Predictive machine learning requires a separate model to be built and trained for each use case, which demands considerable human intervention.

In contrast, GenAI relies on large, multi-task foundation models that can be applied to large amounts of external data with little or no additional training. Using instructions (prompts), we can direct the model to handle specific tasks, and then fine-tune it with other techniques so that it can process proprietary enterprise data and generate high-quality, customized content.
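The contrast can be sketched as follows, assuming a hypothetical `FoundationModel` interface with a single `generate(prompt)` method (a stub here, not any real vendor API). Instead of training one model per task, each task becomes an instruction template served by the same model, with few-shot examples inlined for classification. Fine-tuning on proprietary data is not shown, and the task names and templates are illustrative.

```python
from typing import Protocol


class FoundationModel(Protocol):
    """Hypothetical interface: any model that maps a prompt to text."""

    def generate(self, prompt: str) -> str: ...


# One instruction template per task, all served by the same model.
TASK_TEMPLATES = {
    "summarize": "Summarize the following text in one sentence:\n{text}",
    "extract_entities": "List the aircraft registrations and part numbers in:\n{text}",
    "classify": (
        "Classify the report as MAINTENANCE or OPERATIONS.\n"
        "Report: Hydraulic leak found at gear bay. -> MAINTENANCE\n"
        "Report: Crew requested diversion due to weather. -> OPERATIONS\n"
        "Report: {text} ->"
    ),
}


def run_task(model: FoundationModel, task: str, text: str) -> str:
    """Route a task to the shared model by filling in its instruction template."""
    if task not in TASK_TEMPLATES:
        raise ValueError(f"unknown task: {task}")
    return model.generate(TASK_TEMPLATES[task].format(text=text))


class EchoModel:
    """Stub standing in for a real model: returns the prompt's last line."""

    def generate(self, prompt: str) -> str:
        return prompt.splitlines()[-1]


if __name__ == "__main__":
    stub = EchoModel()
    print(run_task(stub, "classify", "Tyre wear beyond limits on No. 2 wheel."))
```

Adding a new task here means adding a template rather than collecting labeled data and training a new model, which is the practical difference the paragraph above describes.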

The regulatory landscape

EASA’s “Artificial Intelligence Roadmap 2.0” and its concept paper “First usable guidance for Level 1 & 2 machine learning applications” set out how AI tools can be used from both a safety perspective and an environmental protection perspective. These documents answer critical questions and point the way for the application of AI in the aviation industry.

All safety-related or environment-related AI applications within the scope of the EASA Basic Regulation (Regulation (EU) 2018/1139) are affected, including the following domains:

- Initial and continuing airworthiness: systems and equipment subject to type certification or operational regulations

- Maintenance: includes tools for planning and performing maintenance so that unsafe conditions are detected and prevented in a timely manner

- Operations: covers systems, equipment or functions that support, supplement or replace the tasks of the crew or other operating personnel

- Air Traffic Control/Air Navigation Services: applies to equipment intended to support, supplement or replace end-user tasks, whether or not these relate to air traffic services

- Training: covers systems used to monitor training effectiveness or to support organizational management in ensuring compliance and safety

- Airports: includes systems that automate critical airport operations services, such as detecting foreign object debris (FOD), monitoring bird activity, and detecting drones at or near airports

- Environmental protection: includes systems or equipment that affect the environmental characteristics of the product

Trustworthy building blocks

EASA's guidance is organized into four building blocks. It sets objectives for each block and provides criteria that clarify the expectations behind those objectives.

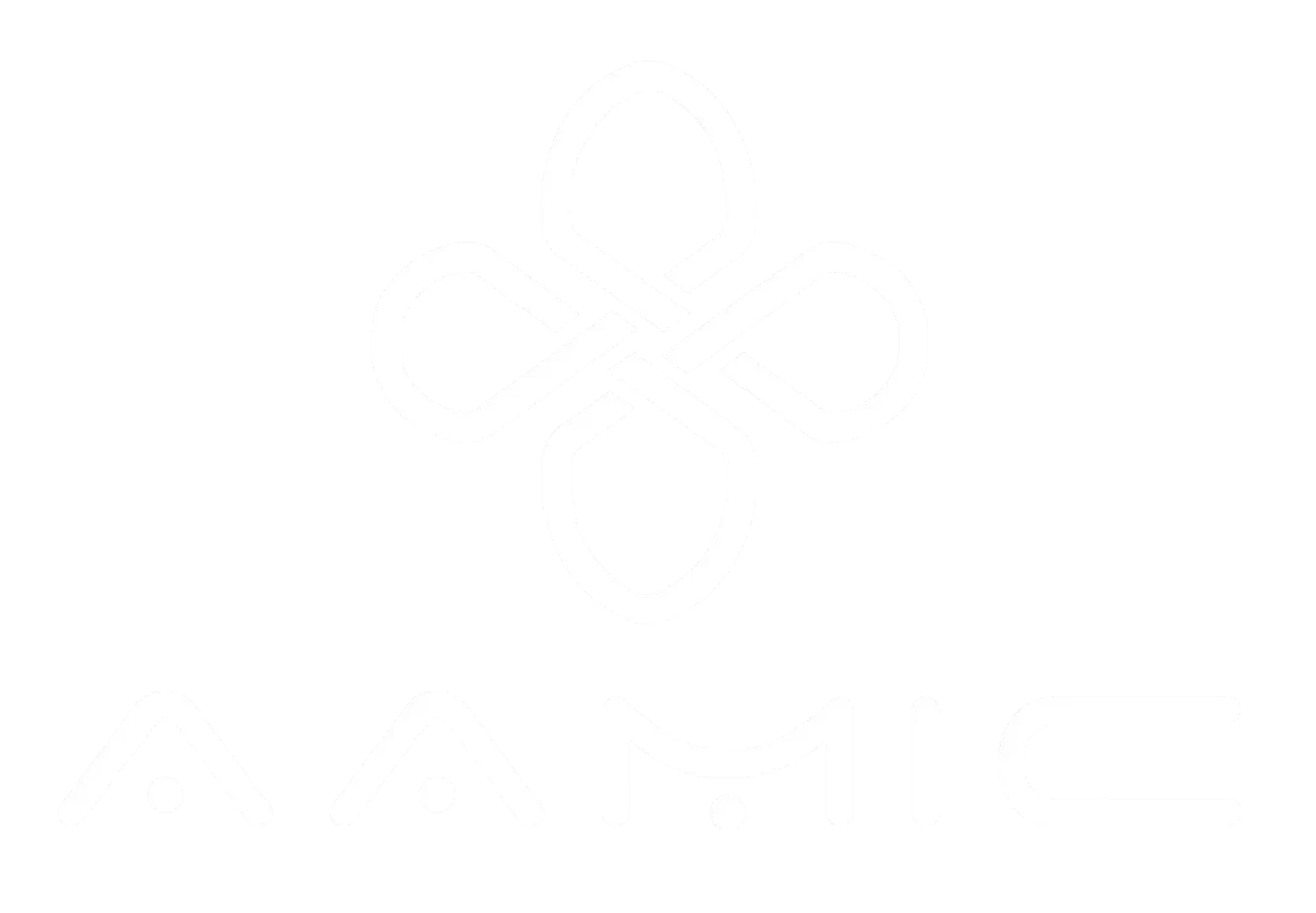

The first part, the trustworthiness analysis, draws on the EU's Ethics Guidelines for Trustworthy AI and is the prerequisite for the other three. It characterizes the AI application and assesses it in terms of safety, security and ethics. This part also divides AI systems into three levels: the higher the level, the less control the user retains and the more the AI intervenes in the work. EASA's classification is consistent with the approach adopted across the AI industry.

The second part, learning assurance, looks at how AI systems are trained to ensure that they produce reliable outputs. The third part addresses the relationship between AI and human factors, and provides guidance on the human factors to consider when using AI. Finally, the fourth part is dedicated to mitigating the safety risks of AI and promptly identifying risks arising from the inherent uncertainty of AI.

Criteria of proportionality

- AI level: as described in the first part of EASA’s guidance, this measures the degree to which the AI system intervenes

- AI system criticality level: as EASA points out in the second part, this is about ensuring sufficient confidence in the outputs of AI-based systems

Given the criticality of existing AI systems and the current level of knowledge about them, EASA restricts how AI models may be used according to the level of confidence that can be guaranteed in their outputs. The guidelines state: “Depending on its criticality in the aviation field, each AI system needs to be evaluated against a graded assurance level (such as the development assurance level for initial and continuing airworthiness, or the software assurance level for air traffic control/air navigation services).”

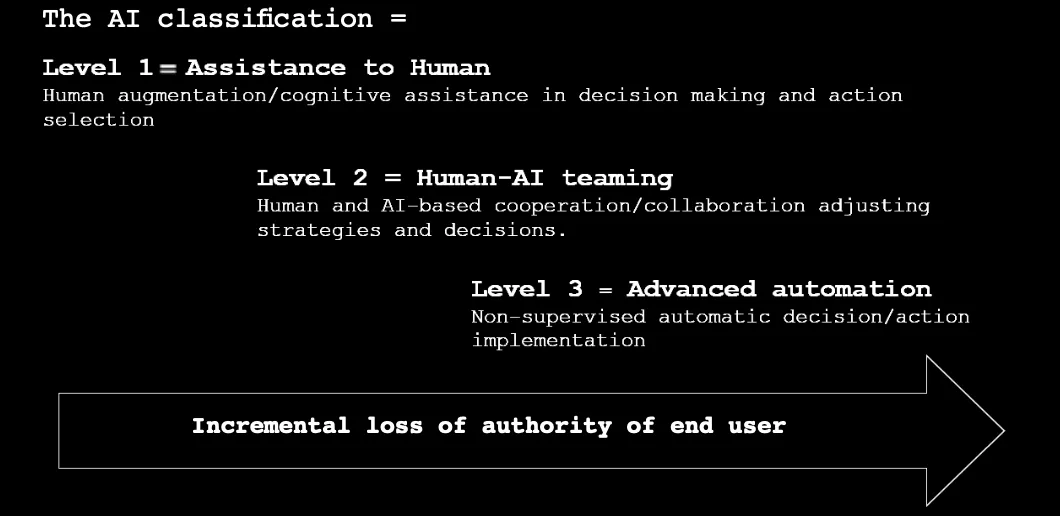

For example, for initial and continuing airworthiness, the assurance level may range from DAL A (catastrophic failure conditions) to DAL D (minor failure conditions), depending on the severity of the failure conditions to which the AI application could contribute.
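This grading reuses the long-standing airworthiness convention (found in guidance such as ARP4754A and DO-178C, and not specific to AI) that ties a function's development assurance level to the most severe failure condition it can contribute to. The lookup below is a simplified sketch: the enum and function names are illustrative, and a real DAL assignment comes out of a full safety assessment, where architectural mitigations can justify a lower level than this table alone would give.

```python
from enum import Enum


class Severity(Enum):
    """Failure condition classifications, ordered from most to least severe."""

    CATASTROPHIC = "catastrophic"
    HAZARDOUS = "hazardous"
    MAJOR = "major"
    MINOR = "minor"
    NO_SAFETY_EFFECT = "no safety effect"


# Conventional mapping from failure condition severity to DAL.
DAL_FOR_SEVERITY = {
    Severity.CATASTROPHIC: "A",
    Severity.HAZARDOUS: "B",
    Severity.MAJOR: "C",
    Severity.MINOR: "D",
    Severity.NO_SAFETY_EFFECT: "E",
}

# Enum iteration follows definition order, i.e. most severe first.
SEVERITY_ORDER = list(Severity)


def assign_dal(failure_conditions: list[Severity]) -> str:
    """Return the DAL driven by the most severe failure condition (simplified:
    ignores architectural mitigations that can justify a lower level)."""
    if not failure_conditions:
        raise ValueError("at least one failure condition is required")
    worst = min(failure_conditions, key=SEVERITY_ORDER.index)
    return DAL_FOR_SEVERITY[worst]


if __name__ == "__main__":
    # An AI function whose worst credible failure could, over time,
    # contribute to a catastrophic outcome.
    print(assign_dal([Severity.MAJOR, Severity.CATASTROPHIC]))  # A
```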

Risk-based levelling of objectives

We can apply this risk-based compliance assessment to the following example:

Imagine an AI system that generates maintenance schedules from the operator's maintenance program. Once trained, the model can analyze existing data and schedule inspections at the most appropriate time. If it is used only to support maintenance planning engineers in their analysis and decision-making, it can be classified as Level 1: human capability augmentation.

In terms of criticality, however, it can be classified as DAL A (Development Assurance Level A). A fault in the AI system could lead to missed inspections, which over time could have catastrophic consequences. The system is therefore rated on both axes as follows:

- AI system level: Level 1. The system only assists maintenance planning engineers in analyzing data and making decisions about matters such as fleet scheduling, hangar slots and resource acquisition.

- Criticality level: DAL A. If the system fails, it may lead to missed inspections, which could have serious consequences later on.

As mentioned above, each part of the EASA guidance contains a set of objectives. When applicants carry out their self-assessment, they must show that the requirements of each applicable objective are met.
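The two-axis rating of the maintenance-planning example can be captured in a small data structure. This is a hypothetical sketch: the class and field names are illustrative, the level labels follow this article, and it only records the rating. Deciding which EASA objectives apply to each combination is left to the guidance itself and is not modeled.

```python
from dataclasses import dataclass
from enum import IntEnum


class AILevel(IntEnum):
    """AI levels as named in this article. Values are ordinal ranks, not EASA
    numbering; a higher rank means less human control."""

    LEVEL_1 = 1   # human capability augmentation
    LEVEL_2 = 2   # augmented automation
    LEVEL_3A = 3  # supervised advanced automation
    LEVEL_3B = 4  # full automation


@dataclass(frozen=True)
class ProportionalityRating:
    """Hypothetical record of the two proportionality criteria for one AI system."""

    system: str
    ai_level: AILevel
    dal: str  # development assurance level, "A" (most stringent) to "E"

    def __post_init__(self) -> None:
        if self.dal not in {"A", "B", "C", "D", "E"}:
            raise ValueError(f"invalid DAL: {self.dal}")

    def summary(self) -> str:
        return f"{self.system}: AI {self.ai_level.name}, DAL {self.dal}"


maintenance_planner = ProportionalityRating(
    system="Maintenance planning assistant",
    ai_level=AILevel.LEVEL_1,
    dal="A",
)

if __name__ == "__main__":
    print(maintenance_planner.summary())
```

Keeping the two fields independent reflects the point of the example: a low AI level (the human stays in charge) does not imply low criticality, so both axes have to be assessed.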

EASA roadmap

EASA also plans to adjust its timeline based on feedback from industry, academia and other institutions. Limited forms of AI and machine learning have already begun to be used in the certification of civil aircraft.

Most practitioners aim to deploy Level 1 AI (human augmentation) around 2025 and Level 2 AI (augmented automation) in civil aviation by 2035. Level 3A (supervised advanced automation) and Level 3B (full automation) are expected to become practical between 2035 and 2050.

The aviation industry is at the forefront of change, and AI is leading that change. As a driver of productivity, GenAI opens up vast possibilities for enhancing human creativity at scale.

It brings unprecedented opportunities to the aviation industry in terms of safety, smooth operations and creativity. Building trust in AI systems and technology is the foundation of it all.